5 Factors in HVACD Design for Data Centers

Data centers are typically high-density enclosed spaces that produce a significant amount of heat in a small area, because the power the equipment needs to operate is dissipated into the air as heat. This heat must be removed or the temperature within the data center will rise, and if it rises enough, the equipment can be seriously affected. Over the long term, the result is equipment failure.

Traditional comfort cooling systems often cannot remove enough heat and there is a critical need to house dedicated units with precision cooling capabilities. But not just any cooling system will do the trick. There are many things to consider when designing an HVACD system for a data center that ensures it maintains a regulated temperature, is sustainable, and continues to help the data center perform its functions optimally.

The HVACD system is built around the air conditioning system, whose design is influenced by 5 main factors:

- Type of IT equipment

- IT equipment configuration

- Service levels

- Costs and budget

- Energy efficiency and green standards

IT Equipment

The type of IT equipment has a huge bearing on the choice of cooling infrastructure. Two types of servers are commonly used: rack-mount servers and blade servers. Both have their benefits and limitations.

Rack-Mounted Servers

Rack-mounted servers are contained in a horizontal case “1U to 5U” in height. ‘U’ is a measurement of rack height that equals 1.75” (44.45 mm).

- Offers an efficient use of floor space

- Easier management of cables and servers

- Flexibility to use servers from different manufacturers

- Hold more memory, more CPUs and more input/output (I/O)

Limitations: Communications have to travel to the top-of-rack or edge network device before they can travel back.

Blade Servers

Blade servers are plug-and-play processing units with shared power feeds, power supplies, fans, cabling and storage.

- Use less space, so multiple servers can be located in the same area

- Servers that share the same space and resources use power and cooling elements more efficiently

- Reduced data center floor space requirements

Limitations: Blade servers can raise power densities above 15 kW per rack, dramatically increasing data center heat levels.
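Because the power a rack draws is dissipated as heat, its power density translates directly into a cooling load. The following is a minimal sketch of that arithmetic, assuming the standard conversion of 1 W to about 3.412 BTU/h and the common sea-level rule of thumb CFM ≈ 3.16 × watts / ΔT (°F). The function names and the 20 °F temperature rise are illustrative assumptions, not values from this article.

```python
BTU_PER_HOUR_PER_WATT = 3.412  # standard unit conversion (1 W is about 3.412 BTU/h)


def heat_load_btu_per_hour(rack_kw: float) -> float:
    """Heat a rack emits, assuming all electrical power becomes heat."""
    return rack_kw * 1000.0 * BTU_PER_HOUR_PER_WATT


def required_airflow_cfm(rack_kw: float, delta_t_f: float = 20.0) -> float:
    """Approximate airflow (CFM) needed to carry the heat away.

    Uses the sea-level rule of thumb CFM = 3.16 * watts / delta_T (F).
    delta_t_f is the assumed air temperature rise across the servers.
    """
    if delta_t_f <= 0:
        raise ValueError("delta_t_f must be positive")
    return 3.16 * rack_kw * 1000.0 / delta_t_f


if __name__ == "__main__":
    rack_kw = 15.0  # the blade-server rack density mentioned above
    print(f"{heat_load_btu_per_hour(rack_kw):,.0f} BTU/h")
    print(f"{required_airflow_cfm(rack_kw):,.0f} CFM at a 20 F rise")
```

A smaller allowed temperature rise demands proportionally more airflow, which is one reason high-density racks put pressure on the air distribution design.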

Equipment Configuration & Layout

The flow of air through the servers is important for effective heat dissipation. It is affected by many variables, including the cabinet and door construction, the cabinet size, and the thermal dissipation of any other components within the cabinet.

For example, the flow of air may be obstructed when one server is positioned so that its hot exhaust air is directed into the intake air of another server, thus preheating the intake air of the second server.

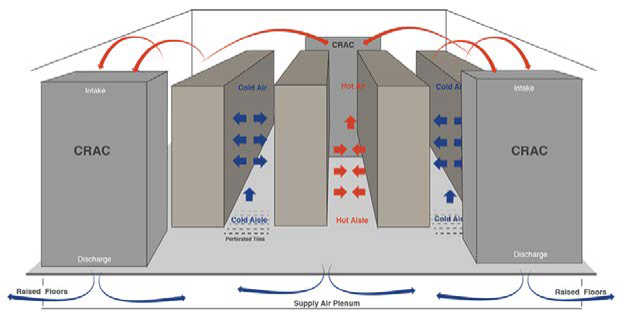

HVACD equipment location and system design are also important. ASHRAE recommends laying out equipment racks according to the Hot Aisle–Cold Aisle layout, which means aligning IT equipment racks in rows so that the cabinet fronts face the cold aisle and exhaust is directed to the hot aisle.

This type of design minimizes hot and cold air mixing and maximizes the heat dissipation.

Cooling System Configuration

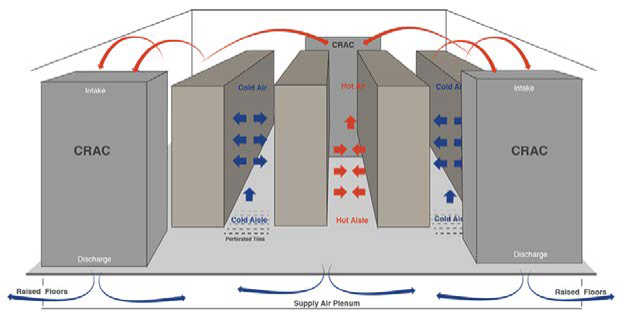

The most common design configuration is the underfloor supply system, which relies on a raised floor with Computer Room Air Conditioning (CRAC) units located around the perimeter of the room.

This model works as follows:

- CRAC units pump cold air into the floor void.

- Vents in the floor deliver cold air to the front of the racks facing each other (cold aisle).

- Hot air escapes through the back of the rack into the hot aisle, where it returns to the CRAC unit.

Temperature and humidity sensors should be installed to monitor conditions in the server room or data center and help prevent server damage.
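As a rough illustration of how such sensor readings might be checked, here is a minimal sketch. The `Reading` type, the `check_reading` function, and both threshold ranges are hypothetical. The temperature limits loosely follow the commonly cited ASHRAE recommended inlet range, and the humidity limits are placeholders only. Actual limits should come from the equipment specifications.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    sensor_id: str
    temp_c: float       # air temperature at the rack inlet, in Celsius
    rh_percent: float   # relative humidity, in percent


# Assumed limits for illustration only; confirm against equipment specs.
TEMP_RANGE_C = (18.0, 27.0)
RH_RANGE_PERCENT = (20.0, 80.0)


def check_reading(reading: Reading) -> List[str]:
    """Return one alert message per limit the reading violates."""
    alerts = []
    low_t, high_t = TEMP_RANGE_C
    low_rh, high_rh = RH_RANGE_PERCENT
    if not low_t <= reading.temp_c <= high_t:
        alerts.append(f"{reading.sensor_id}: temperature {reading.temp_c} C out of range")
    if not low_rh <= reading.rh_percent <= high_rh:
        alerts.append(f"{reading.sensor_id}: humidity {reading.rh_percent} % out of range")
    return alerts


if __name__ == "__main__":
    for r in [Reading("cold-aisle-1", 22.0, 45.0), Reading("cold-aisle-2", 31.5, 85.0)]:
        for alert in check_reading(r):
            print(alert)
```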

The placement of racks is very important. If you set up your room in the wrong layout, for example with all racks facing the same direction, the hot air from the back of one rack will be sucked into the front of the racks in the next row, causing them to overheat and eventually fail.

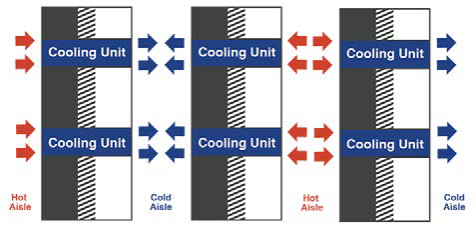

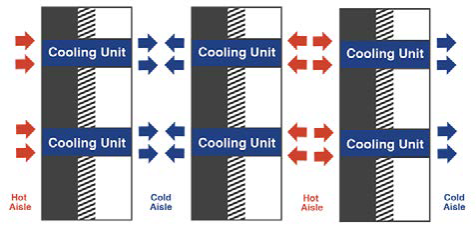

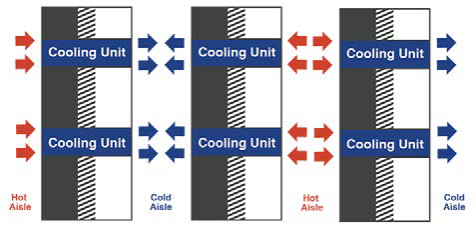

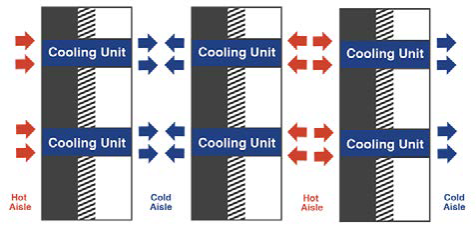

In-row cooling uses cooling units mounted directly within the rows of racks, between cabinets. This configuration delivers localized cooling directly to the front of each rack and provides a very high heat dissipation capability.

When used in conjunction with containment systems, this arrangement is capable of providing at least 20 kW to 30 kW of cooling per cabinet.

Disadvantages include reduced reliability, due to the number of potential failure points, and limited access to each cooling unit, due to its location between cabinets.

Service Levels

Since data centers operate all day, every day, the cooling system must be designed for continuous heat extraction and must incorporate redundancy so that the facility can keep operating during a failure event.
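One common way to express redundancy is to size for the load and then add spare units (often called N+1 when one spare is added). The article does not prescribe a particular scheme, so the sketch below is only an illustration. The function name, the unit capacity, and the default of one spare are assumptions.

```python
import math


def crac_units_required(heat_load_kw: float,
                        unit_capacity_kw: float,
                        spare_units: int = 1) -> int:
    """Units needed to carry the load, plus spares held for failure events."""
    if heat_load_kw < 0 or unit_capacity_kw <= 0 or spare_units < 0:
        raise ValueError("load and spares must be non-negative, capacity positive")
    # N: the minimum number of units whose combined capacity covers the load.
    base_units = math.ceil(heat_load_kw / unit_capacity_kw)
    return base_units + spare_units


if __name__ == "__main__":
    # Hypothetical room: 300 kW of heat, CRAC units rated at 80 kW each.
    print(crac_units_required(300.0, 80.0))
```

With one spare, any single unit can fail or be taken out for maintenance while the remaining units still cover the design load.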

Provisions for servicing and maintenance should be included in the design phase of the project, including:

- Selection of equipment with a view to durability, reliability and maintainability

- Local availability of components and stock of all long lead items

- Uninterrupted access to all HVACD components requiring servicing

- Openings for the largest size component to be delivered and installed

- Local servicing personnel trained and qualified by the computer room A/C unit manufacturer.

The Budget/Cost of a Cooling Source

Is the data center cooled using direct expansion (DX) units or chilled water?

As with most things, the budget will often dictate the cooling solution adopted.

- Direct expansion (DX) systems comprise individual compressors within each of the air distribution units in the data center. The coefficient of performance (CoP) for these systems ranges between 2.5 and 4, which is relatively low, but they are generally less expensive to install.

- Chilled-water systems offer very high energy efficiency and can operate with a CoP greater than 7. They also allow for excellent part-load efficiencies. However, they are often a more expensive option than DX.

From an operational cost point of view, the chilled-water option is much more cost-effective.

From a capital expenditure point of view, the DX solution will often be the recommended option, unless there is simply not enough physical space to fit all the IT equipment.
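The operating-cost gap follows from the definition of CoP: the electrical power a cooling system draws is roughly the heat it removes divided by its CoP. The sketch below works through that arithmetic using CoP values from the ranges quoted above. The 100 kW heat load, the electricity price, and the function names are hypothetical, and the calculation ignores fans, pumps, and part-load behavior.

```python
HOURS_PER_YEAR = 8760  # continuous operation, 24 hours x 365 days


def cooling_power_kw(heat_load_kw: float, cop: float) -> float:
    """Electrical power drawn to remove heat_load_kw at a given CoP."""
    if cop <= 0:
        raise ValueError("cop must be positive")
    return heat_load_kw / cop


def annual_cooling_energy_kwh(heat_load_kw: float, cop: float) -> float:
    """Energy used in a year of continuous operation at constant load."""
    return cooling_power_kw(heat_load_kw, cop) * HOURS_PER_YEAR


def annual_cooling_cost(heat_load_kw: float, cop: float, price_per_kwh: float) -> float:
    """Yearly electricity cost for cooling at a flat tariff."""
    return annual_cooling_energy_kwh(heat_load_kw, cop) * price_per_kwh


if __name__ == "__main__":
    heat_load_kw = 100.0   # hypothetical IT heat load
    price_per_kwh = 0.10   # hypothetical flat electricity tariff
    for name, cop in [("DX", 3.0), ("Chilled water", 7.0)]:
        cost = annual_cooling_cost(heat_load_kw, cop, price_per_kwh)
        print(f"{name}: CoP {cop}, about {cost:,.0f} per year")
```

Under these assumptions the chilled-water system uses less than half the cooling energy of the DX system, which is the saving that has to be weighed against its higher installation cost.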

Energy Efficiency & Green Standards

The data center is a big consumer of energy both for driving the IT equipment and also for the cooling system. The energy bill is often the major part of the overall operating costs.

While the energy consumed by data processing equipment is fairly constant year-round, some simple steps can make cooling more efficient and lower power consumption:

- Organize server racks into a hot and cold aisle configuration.

- Buy servers and HVACD equipment that meet Energy Star efficiency requirements.

- Group IT equipment with similar heat load densities and temperature requirements together.

- Consolidate IT system redundancies. Consider one power supply per server rack instead of providing power supplies for each server.

- Seal any gaps that allow unnecessary infiltration/exfiltration.

The Ideal Cooling Solution for Data Centers

Due to a greater demand for green and smart technologies, major innovations are finally taking place in the HVACD industry. Air conditioning has gone digital.

This digital technology not only makes air conditioning much more energy efficient, but it also makes air conditioning work better. It is able to keep the indoor environment, particularly in data centers, at a comfortable temperature, allowing for open air flows, without compromising performance and operability.

The Air2O Hybrid System

Air2O’s hybrid cooling technology can be applied within Data Centers/Server Rooms around the world, providing significant energy savings. Its ACSESS™ Control System uses smart technology, combining outdoor conditions and the established comfort zone to instantly select the most efficient cooling strategy, thus assuring the highest equipment efficiency.

As ambient conditions or comfort zone inputs change, the system will recalculate and choose the best available cooling strategy. Depending on the equipment, these strategies can include fresh air cooling (economizer), direct evaporative, indirect evaporative, IDEC, and/or DX standard air conditioning.
